Parquet Column Cannot Be Converted In File

When you try to update or display a DataFrame, Spark can fail because one of the Parquet files has an issue, and it raises the error "Parquet column cannot be converted in file". One PySpark report of the error reads "parquet column cannot be converted in file", with string expected and INT32 found. A first step is to check the data format of the id column. If you have decimal type columns in your source data, you should disable the vectorized Parquet reader; this is also how to fix the error when reading decimal data in Parquet format and writing to a Delta table. Spark will use the native data types in Parquet (whatever original data type was in the .parquet files) at runtime.
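As a minimal sketch of disabling the vectorized Parquet reader, the helper below sets the Spark SQL option `spark.sql.parquet.enableVectorizedReader` to `false` on an existing session. The helper name and the path in the usage comment are hypothetical, and the sketch assumes `spark` is an already created `SparkSession`.

```python
# Spark SQL option that controls the vectorized Parquet reader.
VECTORIZED_READER_KEY = "spark.sql.parquet.enableVectorizedReader"


def disable_vectorized_parquet_reader(spark):
    """Turn off the vectorized Parquet reader for this session.

    `spark` is assumed to be an existing SparkSession. The previous
    setting is returned so that the caller can restore it later.
    """
    # "true" is used as the fallback when the option was never set explicitly.
    previous = spark.conf.get(VECTORIZED_READER_KEY, "true")
    spark.conf.set(VECTORIZED_READER_KEY, "false")
    return previous


# Usage, assuming an existing SparkSession named `spark`:
#   previous = disable_vectorized_parquet_reader(spark)
#   df = spark.read.parquet("/path/to/table")  # hypothetical path
#   df.show()
#   spark.conf.set(VECTORIZED_READER_KEY, previous)  # restore afterwards
```

The setting applies to the whole session, so restoring the previous value after the affected read keeps the faster vectorized path available for other tables.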


Parquet stores column types in the file itself, so changing only the Glue catalog cannot change a column's type (ablog)
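Because each Parquet file carries its own column types, one way to check the data format of a column such as id is to compare the type recorded for it in every file. The sketch below is plain Python with a hypothetical helper name; it assumes the per-file schemas were already collected as lists of `(column_name, type_name)` pairs, which is the shape returned by PySpark's `DataFrame.dtypes`.

```python
from collections import defaultdict


def find_type_conflicts(file_schemas, column):
    """Group files by the type they store for `column`.

    `file_schemas` maps a file path to a list of (column_name, type_name)
    pairs. Returns an empty dict when every file agrees on the type,
    otherwise a dict of {type_name: [paths storing that type]}.
    """
    by_type = defaultdict(list)
    for path, dtypes in file_schemas.items():
        for name, type_name in dtypes:
            if name == column:
                by_type[type_name].append(path)
    # More than one distinct type means at least one file disagrees.
    return dict(by_type) if len(by_type) > 1 else {}


# Usage, assuming an existing SparkSession named `spark` and a
# hypothetical list `paths` of individual Parquet file paths:
#   file_schemas = {p: spark.read.parquet(p).dtypes for p in paths}
#   print(find_type_conflicts(file_schemas, "id"))
```

Any file listed under a type that differs from the table's expected type is a candidate for the file named in the error message.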


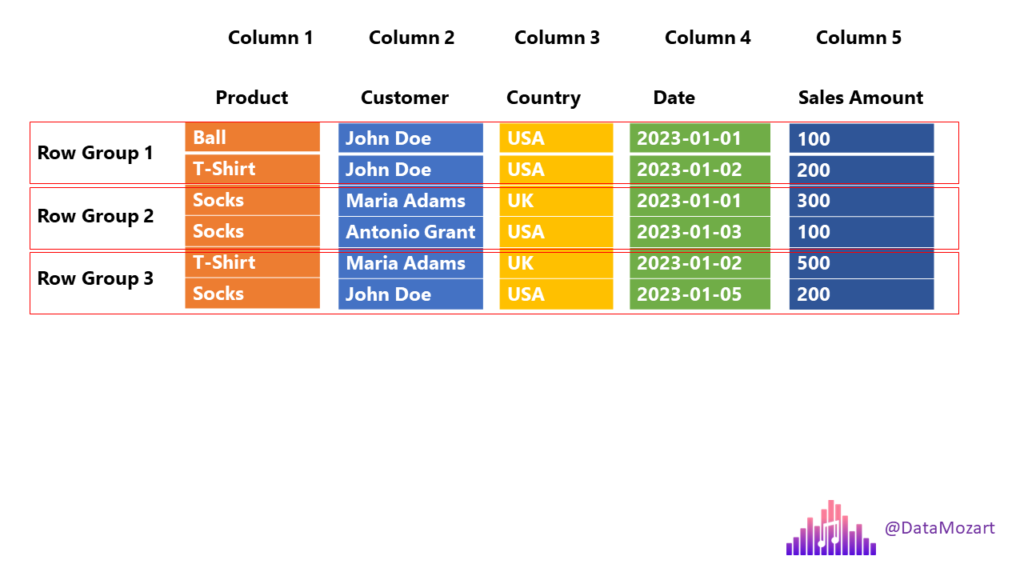

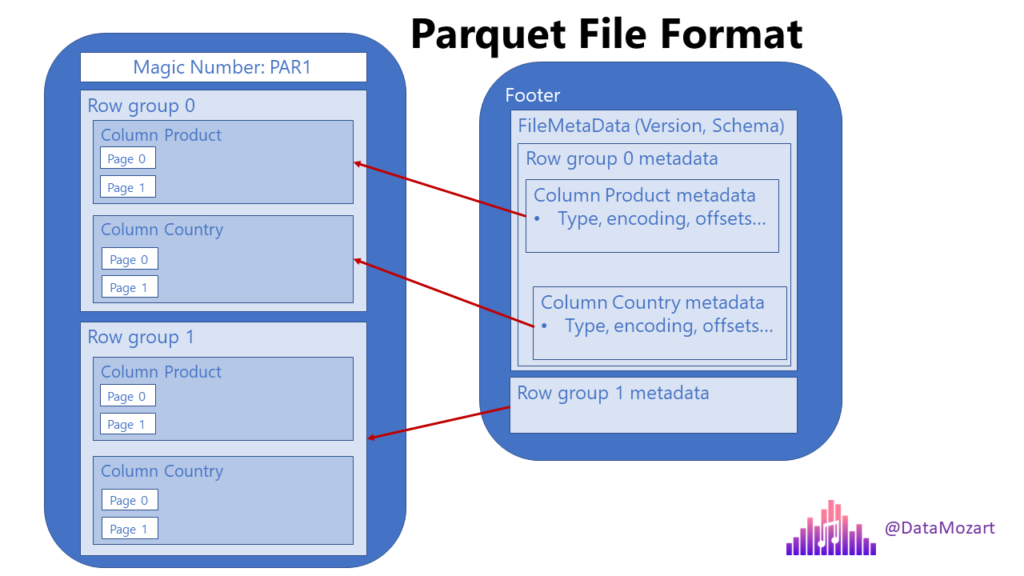


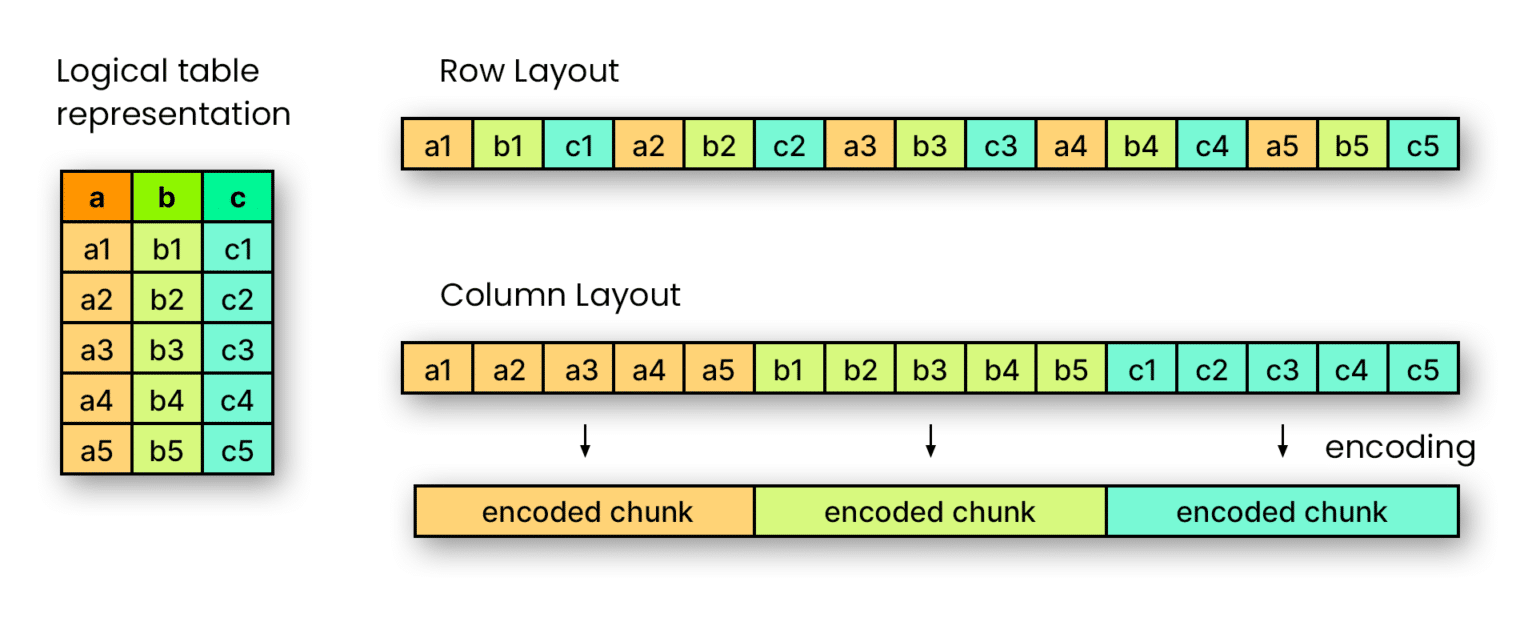

